SumoBundle created an amazing video tutorial on how to turn any URL into a YouTube short using JSON2Video and Make.com.

Check the video tutorial:

Transcript of the video

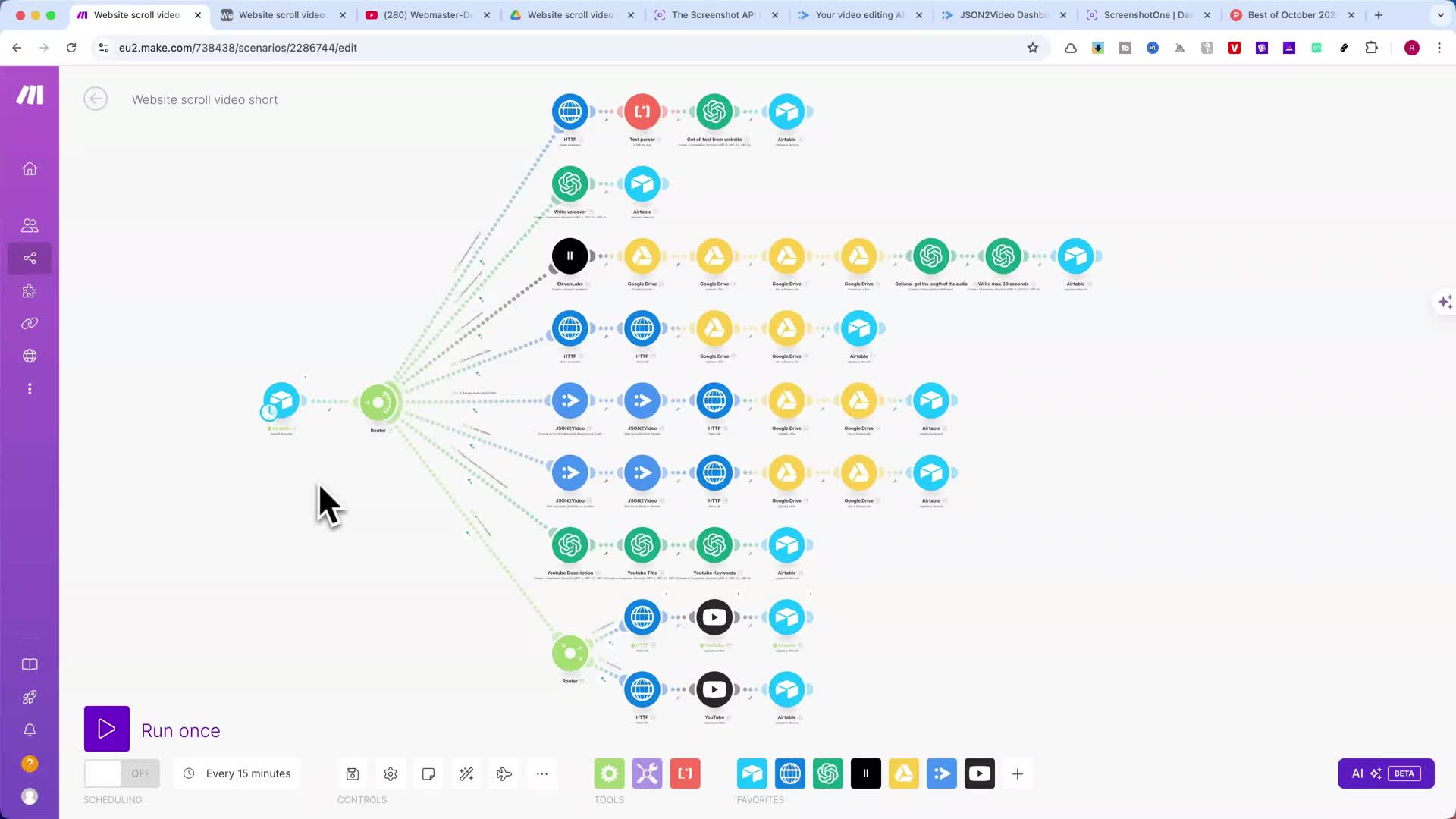

Hey there. Ever wanted to turn a website into a quick, engaging video without spending hours editing? Well, in this video, I'm gonna show you an incredible automation that does exactly that. With just a website URL, this setup can generate a short, high-quality, 30-second video in just a few minutes. Imagine this.

You provide a link, and in no time, you have a video with dynamic visuals, a professional-sounding voiceover, and all the metadata optimized for YouTube, all without lifting a finger. Sounds amazing, right? Stick around, because by the end, you'll see how each part of this automation comes together to create a polished, ready-to-publish video. In the next sections, we'll break down each branch of the automation step by step, from grabbing content from the website, to generating the visuals and audio, all the way to publishing it on YouTube.

By the end, you'll understand how to automate your video creation process like a pro. Alright. Let's dive in. The first thing you'll need is an Airtable base. This is where we store all the data for each video, like the website link, the script, video files, and YouTube metadata.

I'll show you how the automation flows in Make.com, step by step. And don't worry, later I'll walk you through the exact Airtable columns and Make.com settings you'll need to set this up yourself. Now, let's jump into the workflow and see how it transforms a simple URL into a polished, 30-second video. To kick off this automation, I start by adding a URL to my Airtable base.

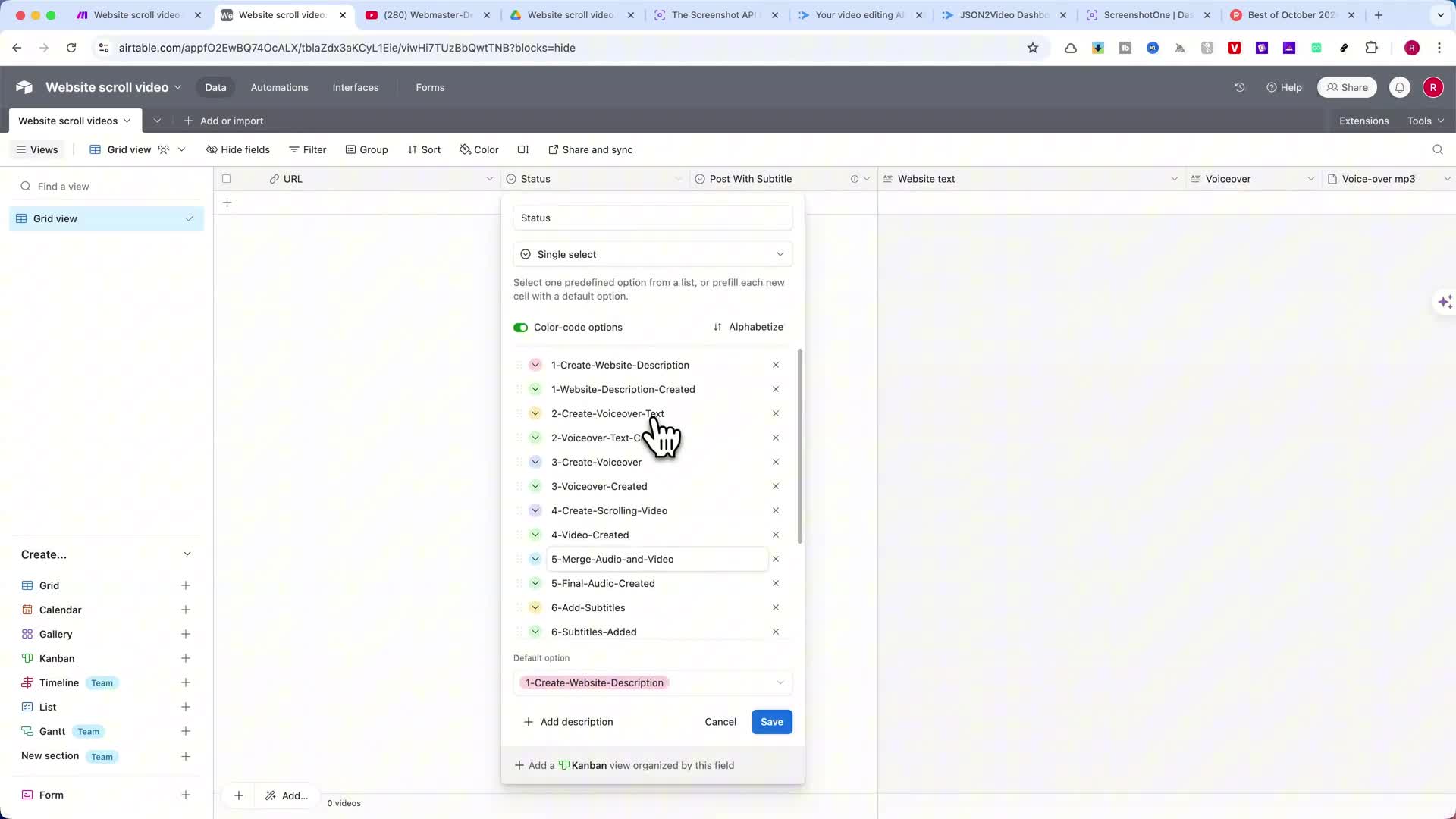

This is the link to the website I wanna turn into a video. In this example, it's a random link from Product Hunt, specifically for a site called feta.io. Once the link is added, I set the status field to the first step, "1 Create website description".

Once I've added a URL to my Airtable base, I head over to Make.com to run the automation. This triggers the first branch, where the automation pulls content directly from the website. The first step, "1 Create website description", grabs the main text from the URL and processes it into a description that we'll use for the video. After the description is created, the automation updates the status in Airtable to "1 Website description created", marking this step as complete and ready for the next stage. Now that we have our website text ready, let's move on to the next part: creating the voiceover text.

To do this, I set the status in Airtable to "2 Create voice over text" and then run the automation in Make.com. In this step, the automation takes the website description that we generated earlier and sends it to an AI tool, like OpenAI, to refine it into a voiceover script. The script is written in a more engaging, conversational tone, perfect for narration. Once the AI completes the script, the automation saves it back to Airtable. The status is updated to "2 Voice over text created", marking this task as done and ready for the next stage.

Now that our voiceover text is ready, it's time to bring it to life with audio. For this step, I set the status in Airtable to "3 Create voiceover" and run the automation in Make.com. In this step, the automation sends the prepared script over to ElevenLabs, a text-to-speech tool that generates a realistic-sounding voiceover. ElevenLabs uses advanced AI to create natural, professional audio from our script, giving our video a polished narration. Once ElevenLabs generates the audio, the automation automatically saves the file to my Google Drive.

This makes it easy to access and organize all the audio files in one place, ready for the next stages of video production. After the audio is saved, the status is updated in Airtable to "3 Voice over created". This signals that the voiceover is complete and ready to be added to the video visuals. Now let's check out the audio we just created.

"You've gotta check this out right now. Feta is the ultimate tool for product and engineering teams to level up their meetings. Imagine effortlessly capturing insights, managing tasks, and keeping everyone in sync. With Feta, you'll save over 45 minutes per meeting and never miss a crucial..."

Now that our voiceover is ready, let's move on to creating the visuals for the video. For this step, I set the status in Airtable to "4 Create scrolling video" and run the automation in Make.com. The automation uses the ScreenshotOne API to capture a scrolling screenshot of the website URL. This creates the effect of smoothly moving through the page, perfect for showcasing the website's content in a dynamic way. The scrolling screenshot is saved to Google Drive, keeping all visual assets organized and accessible for the next step.

Once the scrolling screenshots are complete and saved, the status in Airtable updates to "4 Video created", marking this step as finished. Let's take a look at the scrolling screenshots we captured. For this next part, it'll take a few seconds for the video to generate. You'll soon see a smooth scrolling animation of the web page in action. If you're interested in customizing this further, I recommend exploring the ScreenshotOne dashboard.

By creating a free account, you'll get 100 screenshots per month to play with. Inside the dashboard, you'll find a variety of animation options and settings to make your scrolling visuals more dynamic and unique. It's a great way to add a personal touch to your automated videos. Now that we have our scrolling visuals and voiceover ready, it's time to bring everything together into a single video. For this step, I set the status in Airtable to "5 Merge audio and video" and run the automation in Make.com.

The automation uses JSON2Video, a powerful tool that merges the audio and scrolling visuals into one seamless video. JSON2Video does all the heavy lifting, syncing the narration with the visuals to create a smooth, professional result. And here's the best part: JSON2Video can do much more than just merge files. It can add custom animations, text overlays, and more, which I'll cover in a future video.

But for now, it's giving us exactly what we need: a clean, polished video with synchronized audio and visuals. Once JSON2Video finishes processing, the final video is saved to my Google Drive, and the status in Airtable updates to "5 Final video created". Let's check out the video we just created.

"You've gotta check this out right now. Feta is the ultimate tool for product and engineering teams to level up their meetings. Imagine effortlessly capturing insights, managing tasks, and keeping everyone in sync. With Feta, you'll save over 45 minutes per meeting and never miss a crucial detail again. Plus, subscribe today and snag 3 months free. Already love another tool? No problem. You can still enjoy Feta for free if you have a few months left on your current subscription."

With our final video ready, let's move on to an optional but valuable step: adding subtitles. For this, I set the status in Airtable to "6 Add subtitles" and run the automation in Make.com. Using JSON2Video again, the automation adds subtitles to the video based on the voiceover script. Subtitles make the video more accessible, allowing viewers to follow along even without sound.

Once the subtitles are embedded, the updated video file is saved to Google Drive, and the status in Airtable updates to "6 Subtitles added". This step is optional, so if you prefer videos without subtitles, you can skip it. But adding them can make a big difference for accessibility and engagement, especially on social media. Time to check out the finished video.

"You've got to check this out right now. Feta is the ultimate tool for product and engineering teams to level up their meetings. Imagine effortlessly capturing insights, managing tasks, and keeping everyone in sync. With Feta, you'll save over 45 minutes per meeting and never miss a crucial detail again. Plus, subscribe today and snag 3 months free. Already love another tool? No problem. You can still enjoy..."

With our video complete, it's time to make it YouTube-ready by creating the metadata. For this, I set the status in Airtable to "7 Create YouTube title description keywords" and run the automation in Make.com. The automation uses OpenAI to generate a catchy title, a detailed description, and relevant keywords for YouTube. These elements are essential for boosting the video's visibility and reaching the right audience on the platform.

Once the metadata is created, it's saved back to Airtable and marked as complete with the status "7 YouTube title description keywords created". This way, all the information is ready and waiting for the final upload step. With optimized metadata, our video is fully prepped for YouTube. In the final branch, we'll upload the video and publish it online. We've reached the final step: publishing the video on YouTube.

With our video file and metadata ready, I set the status in Airtable to "8 Upload to YouTube" and run the automation in Make.com. The automation uploads the final video file, along with the title, description, and keywords, directly to one of my old YouTube channels. This makes the process quick and seamless, ensuring the video is published with all the optimized metadata we generated earlier. Once the video is live, the automation saves the YouTube link back to Airtable, updating the record with the published video's URL for easy reference. The status in Airtable is now set to "8 Video published", marking the entire process as complete.
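Every branch follows the same pattern: you set a trigger status by hand, and the branch writes a completion status back when it finishes. As a minimal sketch, the eight pairs described above can be kept in one lookup table. The labels are reconstructed from the narration, so the exact wording in the downloadable Airtable base may differ; `STATUS_PIPELINE` and `completed_status` are hypothetical names.

```python
# Hypothetical reconstruction of the status pairs narrated in the video.
# Keys are the trigger values you set by hand; values are what each branch
# writes back when it finishes. Exact labels in the real base may differ.
STATUS_PIPELINE: dict[str, str] = {
    "1 Create website description": "1 Website description created",
    "2 Create voice over text": "2 Voice over text created",
    "3 Create voiceover": "3 Voice over created",
    "4 Create scrolling video": "4 Video created",
    "5 Merge audio and video": "5 Final video created",
    "6 Add subtitles": "6 Subtitles added",
    "7 Create YouTube title description keywords": "7 YouTube title description keywords created",
    "8 Upload to YouTube": "8 Video published",
}


def completed_status(trigger: str) -> str:
    """Return the status a branch writes back after processing `trigger`."""
    try:
        return STATUS_PIPELINE[trigger]
    except KeyError:
        raise ValueError(f"Unknown trigger status: {trigger!r}") from None
```

For example, `completed_status("4 Create scrolling video")` returns `"4 Video created"`. Keeping the pairs in one place makes it harder for a branch's filter and its final Airtable update to drift apart.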

If you'd like to see some examples of the videos created through this automation, feel free to check out a few videos on this channel. It's a great way to see the power of the setup in action. And that's it: from a single URL to a fully produced, published video in just a few automated steps.

"You've got to check this out right now. Feta is the ultimate tool for product and engineering teams to level up their meetings. Imagine effortlessly capturing insights, managing tasks, and keeping everyone in sync. With Feta, you'll save over 45 minutes per meeting and never miss a crucial detail."

If you're enjoying this video so far, a quick like would really help me out. Now, let's get into how I made this automation.

I'm assuming you already have a basic understanding of setting up an Airtable base and making connections in Make.com. For a simpler setup, you can grab both the Airtable base and the full automation blueprint from the first link in the description. I've noticed that many of you who've purchased my previous automations have shared some fantastic results, so thank you for that. Also, if you haven't yet, be sure to check out my last two big automation videos on this channel. Once you download the JSON blueprint and import the Airtable base into your account, all that's left to do is connect your own API keys and accounts to each module.

Here's how to import the blueprint: create a new automation, browse for the file, and click Import. It's that easy. Of course, if you'd prefer to build the automation from scratch, that's awesome too. In the next steps, I'll walk you through the key elements of the setup, from the prompts I used to the setting adjustments needed for certain modules.

If you've watched my previous tutorials, this should feel pretty familiar. Alright, let's dive into the details so you can see exactly how each part of this automation works. Let's set up our Airtable base with the following columns to manage each step of our automation.

URL. This URL field stores the link to the website we'll convert into a video.

Status. This single select field controls each automation step, with options like "1 Create website description". Feel free to pause the video to view all the options.

Post with subtitle. A single select field to indicate whether the video will have subtitles. I recommend enabling subtitles for better accessibility.

Website text. This long text field stores the content extracted from the website, which will later become the voiceover script.

Voice over text. Another long text field, where the refined, AI-generated voiceover script is saved.

Voice over audio MP3. An attachment field that stores the link to the generated audio file.

Timestamps. This long text field holds the audio transcript with timestamps, which is useful if you're managing audio and video lengths manually.

Audio seconds. A number field for the audio length. If the audio is over 30 seconds, the value here caps at 30. This helps if you want to extend the automation for longer videos.

Scrolling video. An attachment field for the link to the scrolling video visuals.

Final video. Once JSON2Video merges the visuals and audio, the link to the final video is stored here. This is also an attachment field.

Final video with subtitles. If subtitles are enabled, the final video with subtitles is stored here, ready for YouTube.

YouTube title. A long text field for the AI-generated, optimized title.

YouTube description. Another long text field, storing the AI-generated video description.

YouTube keywords. This long text field contains the keywords that boost the video's reach.

YouTube URL. This final URL field stores the link to the published YouTube video. Note that it may take a few minutes for this link to become active after upload.

With these columns in place, your Airtable base is all set. Now, let's move on to connecting these fields in Make.com to bring the automation to life. Let's jump into the first branch, where we create the website description. This part starts with the Search Records module from Airtable, which pulls in the URL and status from our database.
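If you also want to read these rows outside Make.com, for debugging or a custom script, the columns described above map naturally onto a typed record. This is a minimal sketch, assuming the Airtable REST record shape `{"id": ..., "fields": {...}}` and that the column names match the narration exactly. `VideoRecord` is a hypothetical name, and the `"Yes"` option for Post with subtitle is an assumption.

```python
from dataclasses import dataclass
from typing import Any, Optional


def _first_attachment_url(value: Any) -> Optional[str]:
    """Airtable returns attachment fields as a list of objects with a "url" key."""
    if isinstance(value, list) and value and isinstance(value[0], dict):
        return value[0].get("url")
    return None


@dataclass
class VideoRecord:
    """One row of the video table; attributes mirror the column names above."""
    record_id: str
    url: str = ""
    status: str = ""
    post_with_subtitle: bool = False
    website_text: str = ""
    voice_over_text: str = ""
    voice_over_audio_url: Optional[str] = None
    timestamps: str = ""
    audio_seconds: Optional[float] = None
    scrolling_video_url: Optional[str] = None
    final_video_url: Optional[str] = None
    final_video_with_subtitles_url: Optional[str] = None
    youtube_title: str = ""
    youtube_description: str = ""
    youtube_keywords: str = ""
    youtube_url: Optional[str] = None

    @classmethod
    def from_airtable(cls, record: dict[str, Any]) -> "VideoRecord":
        """Build from an Airtable REST record. Airtable omits empty fields
        from API responses, so every lookup falls back to a default."""
        f = record.get("fields", {})
        seconds = f.get("Audio seconds")
        return cls(
            record_id=record["id"],
            url=f.get("URL", ""),
            status=f.get("Status", ""),
            # Assumption: the single select uses a "Yes" option for subtitles.
            post_with_subtitle=f.get("Post with subtitle") == "Yes",
            website_text=f.get("Website text", ""),
            voice_over_text=f.get("Voice over text", ""),
            voice_over_audio_url=_first_attachment_url(f.get("Voice over audio MP3")),
            timestamps=f.get("Timestamps", ""),
            audio_seconds=float(seconds) if seconds is not None else None,
            scrolling_video_url=_first_attachment_url(f.get("Scrolling video")),
            final_video_url=_first_attachment_url(f.get("Final video")),
            final_video_with_subtitles_url=_first_attachment_url(
                f.get("Final video with subtitles")
            ),
            youtube_title=f.get("YouTube title", ""),
            youtube_description=f.get("YouTube description", ""),
            youtube_keywords=f.get("YouTube keywords", ""),
            youtube_url=f.get("YouTube URL"),
        )
```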

Make sure to pause the video here and select the correct Airtable base and table so that the automation pulls the right records. Once we have the records, we move through the following steps.

Filter setup. To ensure each step only runs at the right time, I've set up a filter. Here, we're filtering on the status field being set to "1 Create website description".
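The filter between Search Records and the rest of a branch is a single equality check on the Status field. The sketch below expresses the same check in plain Python, assuming records arrive in the Airtable REST shape `{"id": ..., "fields": {...}}`. `records_for_step` is a hypothetical helper name, not a Make.com API.

```python
from typing import Any, Iterable


def records_for_step(
    records: Iterable[dict[str, Any]], trigger_status: str
) -> list[dict[str, Any]]:
    """Keep only the records whose Status equals this branch's trigger value.

    Mirrors a Make filter of the form: Status (Equal to) "<trigger>".
    Records missing a Status field (Airtable omits empty fields) are dropped.
    """
    return [
        record
        for record in records
        if record.get("fields", {}).get("Status") == trigger_status
    ]
```

Because the comparison is exact, a stray space or a different capitalization in the Airtable option would silently stop a branch from running. That is one reason to copy the option labels rather than retype them.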

You'll only need to set up this kind of filter once, but it's essential for controlling the flow of the automation.

HTTP request. Next, we use the HTTP module to make a request to the URL. This fetches the website's HTML content so that we can extract the main text.

Text parser. The HTML content is then passed through a Text Parser module to convert it into readable text. This strips away all the unnecessary HTML, leaving us with just the main content we wanna use.

OpenAI module. Now, we send the extracted text to OpenAI to generate a clear, engaging website description. I'm going to pause the video here to show you the exact prompt I used to make sure we get a well-structured output. Feel free to copy or modify this prompt based on your needs.

Updating Airtable. Finally, we update our Airtable record with the generated description in the Website text field and set the status to "1 Website description created". This ensures the automation is ready to proceed to the next branch. And that wraps up the first branch.
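The HTTP request and text parser steps of this first branch amount to "download the HTML, then keep only the visible text." The sketch below approximates them with Python's standard library. It is not the Make.com Text Parser itself; the choice to skip `script`, `style` and `noscript` content and the browser-like User-Agent header are assumptions.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class _TextExtractor(HTMLParser):
    """Collects text nodes, ignoring anything inside script/style/noscript."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True turns &amp; into & for us
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def html_to_text(html: str) -> str:
    """Strip tags and return the visible text joined by single spaces."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def fetch_website_text(url: str, timeout: float = 15.0) -> str:
    """Download a page and return its visible text (network access required)."""
    # Assumption: some sites reject requests without a browser-like User-Agent.
    req = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        html = resp.read().decode(charset, errors="replace")
    return html_to_text(html)
```

The resulting text is what gets handed to OpenAI for the website description. Navigation menus and footers survive this simple approach, which is part of why the OpenAI step is still needed to produce a clean description.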

In the next step, we'll take this description and turn it into a voiceover script. Moving on to the second branch, where we turn the website description into a voiceover script. This branch starts after the first is complete and has its own filter, just like each part of this automation. The filter here checks whether the status in Airtable is set to "2 Create voice over text". Setting up filters like this ensures each branch only runs when it's supposed to, keeping everything organized.

Here's how the steps work in this branch.

Write voiceover. We start by sending the website description to OpenAI to generate a voiceover script. The AI takes the description text and rephrases it in a conversational tone, perfect for narration. I'll pause here briefly so you can see the prompt I used in OpenAI. Feel free to adapt it to suit your style.

Update Airtable. Once the voiceover script is generated, we save it back to Airtable in the Voice over text field. The status is also updated to "2 Voice over text created", signaling that this step is complete and ready for the next stage. And that's it for branch 2.
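Under the hood, the OpenAI module sends a Chat Completions request. The sketch below shows that request body and how to read the reply. The prompt text is a hypothetical stand-in, since the author's actual prompt is only shown on screen, and the model name `gpt-4o-mini` is an assumption; use whichever model your module is configured with.

```python
from typing import Any

# Endpoint this body is intended for (OpenAI Chat Completions):
#   POST https://api.openai.com/v1/chat/completions
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"

# Hypothetical prompt, not the one used in the video.
VOICE_OVER_PROMPT = (
    "Rewrite the following website description as a voiceover script for a "
    "30-second vertical video. Use a conversational, energetic tone, short "
    "sentences, and plain text only (no emojis, headings, or stage directions)."
)


def build_voice_over_request(website_text: str, model: str = "gpt-4o-mini") -> dict[str, Any]:
    """Build a Chat Completions request body; the default model is an assumption."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": VOICE_OVER_PROMPT},
            {"role": "user", "content": website_text},
        ],
    }


def extract_reply(response: dict[str, Any]) -> str:
    """Return the assistant's text from a Chat Completions response."""
    return response["choices"][0]["message"]["content"].strip()
```

Putting the instructions in the system message and the scraped text in the user message keeps the website content from being mistaken for instructions. It also lets you swap prompts without touching the Airtable mapping.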

Now we have a polished voiceover script, and we're ready to move on to the next branch, where we'll actually generate the audio. In this third branch, we transform our voiceover text into audio and prepare it for the video. Like the other branches, this one also has a filter to check that the status is set to "3 Create voiceover". This way, the automation only proceeds when the previous steps are complete and everything's ready. Let's go through each step.

ElevenLabs speech synthesis. First, we use ElevenLabs to turn our voiceover script into natural-sounding audio. ElevenLabs generates the audio file, making it sound professional and engaging.

Google Drive, folder and file upload. Next, the audio file is uploaded to Google Drive, where it's organized into a specific folder for easy access. We create the folder if it doesn't already exist, and the file is stored there for later use.

Google Drive, get share link. After uploading, we create a shareable link for the audio file. This ensures we can easily reference or access the audio file in future steps.
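For the optional audio-length steps in this branch, the duration can be read from a Whisper transcription. This is a minimal sketch, assuming the transcription was requested with `response_format="verbose_json"`, which returns a top-level `duration` and per-segment `end` times; the helper names are hypothetical. The 30-second limit matches the Audio seconds column.

```python
from typing import Any

MAX_SECONDS = 30.0  # target length used throughout this automation


def audio_seconds_from_transcription(transcription: dict[str, Any]) -> float:
    """Read the audio length from a verbose_json Whisper response.

    Assumption: prefer the top-level "duration"; otherwise fall back to the
    end time of the last segment. Returns 0.0 if neither is present.
    """
    if "duration" in transcription:
        return float(transcription["duration"])
    segments = transcription.get("segments") or []
    return float(segments[-1]["end"]) if segments else 0.0


def capped_seconds(seconds: float, limit: float = MAX_SECONDS) -> float:
    """Value to store in Audio seconds: the real length, capped at the limit."""
    return min(seconds, limit)


def needs_shorter_script(seconds: float, limit: float = MAX_SECONDS) -> bool:
    """True when the voiceover overruns and the script should be rewritten."""
    return seconds > limit
```

A 34.2-second voiceover would be stored as 30.0 and flagged for the shorter rewrite, while a 27.5-second one passes through unchanged.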

Optional: get audio length. To keep track of the audio length, we send the file to OpenAI's Whisper model, which transcribes it and returns the duration. This is optional, but useful if you're aiming for strict timing, such as keeping videos within a set length.

Optional: write max 30 seconds. Based on the audio length, the automation can also limit the script to a maximum of 30 seconds if needed. This step uses OpenAI again to generate a shorter script if the audio exceeds our target length. It's optional, but handy if you want flexibility with different video lengths.

Update Airtable. Finally, we update our Airtable record with the link to the audio file in the Voice over audio MP3 field and update the status to "3 Voice over created", signaling that the step is complete. With the audio file ready and stored, we're all set to add the visuals in the next branch.

In this fourth branch, we create the scrolling screenshots that will serve as the visuals for our video. This branch also has a filter to ensure it only runs when the status is set to "4 Create scrolling video", keeping everything in sync. Here's how each step works.

HTTP request. We start by sending a request to ScreenshotOne's API. This API generates a series of scrolling screenshots of the website, giving us dynamic visuals that move through the site's content.

HTTP, get a file. Once the screenshots are ready, we retrieve the file through another HTTP module. This step ensures we have the actual images needed for the video.

Google Drive, upload file. Next, the screenshots are uploaded to Google Drive, where they're stored and organized for easy access in the next steps.

Google Drive, get share link. After uploading, we generate a shareable link to the screenshots, making it simple to reference and include them in the final video.

Update Airtable. Finally, we update our Airtable record with the link to the scrolling screenshots in the Scrolling video field and set the status to "4 Video created". This completes the branch, preparing everything for the audio and visuals to be merged in the next step. With the scrolling visuals now ready, we're all set to move on and combine them with the voiceover in the following branch.
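The first HTTP request in this branch is a GET against ScreenshotOne with the target page passed as a query parameter. The sketch below builds such a URL. The `/animate` endpoint and the parameter names (`scenario=scroll`, `format`, `viewport_width`, `viewport_height`) are assumptions based on my reading of ScreenshotOne's animation API and should be checked against its documentation. The 1080x1920 vertical viewport is also an assumption, chosen for YouTube Shorts.

```python
from urllib.parse import urlencode

# Assumed endpoint and parameter names; verify against the ScreenshotOne docs.
SCREENSHOTONE_ANIMATE_ENDPOINT = "https://api.screenshotone.com/animate"


def build_scrolling_video_url(
    access_key: str,
    page_url: str,
    width: int = 1080,
    height: int = 1920,
) -> str:
    """Build a GET URL asking for a scrolling MP4 of `page_url`."""
    params = {
        "access_key": access_key,
        "url": page_url,  # urlencode escapes the nested URL for us
        "scenario": "scroll",
        "format": "mp4",
        "viewport_width": width,
        "viewport_height": height,
    }
    return f"{SCREENSHOTONE_ANIMATE_ENDPOINT}?{urlencode(params)}"
```

Encoding the target URL with `urlencode` matters: a page link that carries its own query string (like a Product Hunt `?ref=` link) would otherwise leak its parameters into the API call.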

In this fifth branch, we bring everything together by merging the audio and scrolling screenshots into a final video. As with each branch, there's a filter here to ensure it only runs when the status is set to "5 Merge audio and video", keeping the workflow smooth and controlled. Here's a breakdown of each step.

JSON2Video, concatenate audio and video. We start with JSON2Video, a powerful tool that merges our audio with the scrolling visuals. JSON2Video syncs the narration with the visuals, giving us a professional-looking video. This tool can do much more, like adding animations and overlays, but we'll keep it simple here. I'll cover those advanced features in a future video.

JSON2Video, wait for render. After initiating the merge, we use a Wait for Render module to ensure JSON2Video has finished processing the video. This guarantees that we only move forward once the video file is complete and ready.

HTTP, get the file. Once the video has finished rendering, we use an HTTP module to retrieve the final video file from JSON2Video.

Google Drive, upload file. Next, we upload the final video to Google Drive for storage and easy access.

Google Drive, get share link. We then generate a shareable link for the final video, making it accessible for publishing or sharing.

Update Airtable. Finally, we update our Airtable record with the link to the merged video in the Final video field and set the status to "5 Final video created". This signals that the video is now ready for optional subtitling in the next branch. And that's it. We now have a completed video with synced audio and visuals.
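The merge and wait-for-render pair can be pictured as "submit a movie description, then poll until it is done." The sketch below builds a one-scene movie with the scrolling video and the voiceover, and polls a status callback. Several details are assumptions to verify against the JSON2Video API documentation: the endpoints in the comment, the `"custom"` resolution with `width`/`height`, and the status response shape `{"movie": {"status": ..., "url": ...}}` with `done`/`error` values. The status fetcher is injected so the polling logic can be tested without network access.

```python
import time
from typing import Any, Callable

# Assumed JSON2Video endpoints (verify against the official API docs):
#   POST https://api.json2video.com/v2/movies             -> starts a render
#   GET  https://api.json2video.com/v2/movies?project=ID  -> render status
JSON2VIDEO_MOVIES_URL = "https://api.json2video.com/v2/movies"


def build_merge_payload(video_url: str, audio_url: str) -> dict[str, Any]:
    """One scene playing the scrolling video with the voiceover on top."""
    return {
        "resolution": "custom",  # assumption: custom size for a vertical Short
        "width": 1080,
        "height": 1920,
        "scenes": [
            {
                "elements": [
                    {"type": "video", "src": video_url},
                    {"type": "audio", "src": audio_url},
                ]
            }
        ],
    }


def wait_for_render(
    get_status: Callable[[], dict[str, Any]],
    interval: float = 10.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll `get_status` until the movie is done; return the rendered file URL."""
    waited = 0.0
    while True:
        movie = get_status().get("movie", {})
        status = movie.get("status")
        if status == "done":
            return movie["url"]
        if status == "error":
            raise RuntimeError(f"Render failed: {movie.get('message', 'unknown error')}")
        if waited >= timeout:
            raise TimeoutError(f"Render not finished after {timeout} seconds")
        sleep(interval)
        waited += interval
```

The explicit timeout is a design choice: without it, a stuck render would keep the scenario polling indefinitely, whereas failing loudly leaves the Airtable status on "5 Merge audio and video" so the record can simply be rerun.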

In the next step, we'll add subtitles if needed. In this sixth branch, we add subtitles to our video, making it more accessible and engaging for viewers. This step is optional, and like the previous branches, it has its own filter to ensure it only runs when the status is set to "6 Add subtitles". Let's walk through each part of this branch.

JSON2Video, add automatic subtitles. First, we use JSON2Video to automatically add subtitles to the video based on the voiceover script. This helps make the content accessible to those watching without sound or who prefer to read along.

JSON2Video, wait for render. After the subtitles are added, we use a Wait for Render module to ensure JSON2Video has finished processing the video with subtitles before moving forward.

HTTP, get the file. Once the video with subtitles is ready, we retrieve the final file through an HTTP module.
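The subtitle pass is a second render that takes the merged video as input. This is a minimal sketch of that request body, plus a check for whether the branch should run at all. The movie-level `"subtitles"` element and its `language` option are assumptions about JSON2Video's schema and should be confirmed in its documentation. The `"Yes"` option for Post with subtitle is also an assumed label.

```python
from typing import Any


def wants_subtitles(fields: dict[str, Any]) -> bool:
    """Assumption: "Post with subtitle" is a single select with a "Yes" option."""
    return fields.get("Post with subtitle") == "Yes"


def build_subtitles_payload(final_video_url: str, language: str = "en") -> dict[str, Any]:
    """Re-render the merged video with automatic subtitles.

    Assumption: JSON2Video accepts a movie-level "subtitles" element that
    generates captions from the audio track; check the element's options
    (styling, position) in the JSON2Video docs.
    """
    return {
        "resolution": "custom",
        "width": 1080,
        "height": 1920,
        "scenes": [{"elements": [{"type": "video", "src": final_video_url}]}],
        "elements": [{"type": "subtitles", "language": language}],
    }
```

Rendering subtitles as a separate pass, rather than in the first merge, keeps a clean copy without captions in the Final video column. That is what makes the two upload routes in the last branch possible.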

Google Drive, upload file. We then upload the video with subtitles to Google Drive, storing it alongside the original version for easy access.

Google Drive, get share link. A shareable link for the subtitled video is generated, making it easy to reference and publish.

Update Airtable. Finally, we update our Airtable record with the link to the subtitled video in the Final video with subtitles field and set the status to "6 Subtitles added". With the video and subtitles complete, we're almost ready to publish. In the next branch, we'll create the metadata and prepare it for upload.

Now we're on to the seventh branch, where we create the YouTube metadata to help the video reach the right audience. This branch has its own filter to ensure it only runs when the status is set to "7 Create YouTube title description keywords".

Here's how each module works in this branch.

YouTube description. We start by using OpenAI to generate an engaging YouTube description based on the video content. This description summarizes the video and helps viewers understand what it's about, which is essential for drawing in the right audience.

YouTube title. Next, we create a catchy, optimized title using another OpenAI prompt. This title is designed to capture attention and improve searchability on YouTube, making it easier for viewers to find the video.

YouTube keywords. Finally, we use OpenAI to generate a list of relevant keywords that will help the video rank in YouTube search results. These keywords are critical for reaching viewers interested in the video's topic.

Update Airtable. After generating all the metadata, we save it back to Airtable in the YouTube title, YouTube description, and YouTube keywords fields. We also update the status to "7 YouTube title description keywords created", signaling that we're ready for the final step. With the metadata complete, we're all set to publish the video. In the next and final branch, we'll upload everything to YouTube.
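AI-generated metadata does not always respect YouTube's limits. The YouTube Data API rejects titles over 100 characters, descriptions over 5,000 bytes, and angle brackets in either. It also caps tags at 500 characters in total, counting the commas between tags and the quotation marks added around tags that contain spaces. The sketch below normalizes the three Airtable fields into the `snippet`/`status` body used by `videos.insert`. It assumes OpenAI returns the keywords as a comma- or newline-separated string; the helper names are hypothetical.

```python
from typing import Any

TITLE_MAX_CHARS = 100
DESCRIPTION_MAX_BYTES = 5000
TAGS_MAX_CHARS = 500


def _clean(text: str) -> str:
    """YouTube rejects "<" and ">" in titles and descriptions."""
    return text.replace("<", "").replace(">", "").strip()


def split_keywords(raw: str) -> list[str]:
    """Assumption: keywords arrive comma- or newline-separated; dedupe case-insensitively."""
    tags: list[str] = []
    seen: set[str] = set()
    for part in raw.replace("\n", ",").split(","):
        tag = part.strip().strip('"#').strip()
        if tag and tag.lower() not in seen:
            seen.add(tag.lower())
            tags.append(tag)
    return tags


def fit_tags(tags: list[str], limit: int = TAGS_MAX_CHARS) -> list[str]:
    """Keep tags in order until the combined length would exceed the limit."""
    kept: list[str] = []
    total = 0
    for tag in tags:
        cost = len(tag) + (2 if " " in tag else 0)  # quotes around multi-word tags
        cost += 1 if kept else 0                     # comma separator
        if total + cost > limit:
            break
        kept.append(tag)
        total += cost
    return kept


def build_upload_body(title: str, description: str, keywords: str, privacy: str = "public") -> dict[str, Any]:
    """Request body for videos.insert with part=snippet,status."""
    desc_bytes = _clean(description).encode("utf-8")[:DESCRIPTION_MAX_BYTES]
    return {
        "snippet": {
            "title": _clean(title)[:TITLE_MAX_CHARS],
            # errors="ignore" drops a multi-byte character cut in half by the slice
            "description": desc_bytes.decode("utf-8", errors="ignore"),
            "tags": fit_tags(split_keywords(keywords)),
        },
        "status": {"privacyStatus": privacy},
    }
```

Trimming in code is more reliable than asking the prompt to "keep it under 100 characters": language models count characters poorly, and a single overlong title would make the upload in the final branch fail.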

We've made it to the final step: publishing the video on YouTube. This eighth branch has two routes, allowing us to upload either the video with subtitles or the one without, depending on your preference. Each route has its own filter to ensure the correct video version is uploaded based on the status setting in Airtable. Here's how each route works.

Route 1, upload video with subtitles.

HTTP, get file. First, we retrieve the video with subtitles from Google Drive.

YouTube, upload video. Next, the video file is uploaded to YouTube along with the title, description, and keywords we created earlier.

Update Airtable. Once the upload is complete, the video link is saved back to Airtable in the YouTube URL field, and the status is updated to "8 Video published".

Route 2, upload video without subtitles.

HTTP, get file. In this route, we retrieve the version of the video without subtitles.

YouTube, upload video. This video file is then uploaded to YouTube with the same metadata.

Update Airtable. Finally, the video link is saved back to Airtable, and the status is set to "8 Video published". With this setup, the video is now live on YouTube, and you have the option to share it with or without subtitles. And that's it.
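The two routes differ only in which attachment they download. The video says each route's filter checks a setting in Airtable. The sketch below assumes that setting is the Post with subtitle column with a `"Yes"` option, and that the uploaded video's `id` from the YouTube response is turned into the link saved in the YouTube URL field. Helper names are hypothetical.

```python
from typing import Any, Optional


def _first_url(value: Any) -> Optional[str]:
    """Airtable attachment fields are lists of objects with a "url" key."""
    if isinstance(value, list) and value and isinstance(value[0], dict):
        return value[0].get("url")
    return None


def pick_upload_video(fields: dict[str, Any]) -> str:
    """Route 1 (subtitled) or route 2 (plain), based on "Post with subtitle".

    Assumption: "Yes" in the single select means the subtitled file is used.
    """
    if fields.get("Post with subtitle") == "Yes":
        url = _first_url(fields.get("Final video with subtitles"))
        if url is None:
            raise ValueError("Subtitles requested but no subtitled video is attached")
        return url
    url = _first_url(fields.get("Final video"))
    if url is None:
        raise ValueError("No final video is attached")
    return url


def youtube_watch_url(video_id: str) -> str:
    """Build the link stored in the YouTube URL field from the uploaded video's id."""
    return f"https://www.youtube.com/watch?v={video_id}"
```

Raising an error when subtitles were requested but the subtitled file is missing, instead of quietly falling back to the plain version, keeps a skipped branch 6 from publishing a video you didn't intend to post.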

From a simple URL to a fully published video, all done through automation. Thanks for following along, and don't forget to check out some of my other automation tutorials for more tips and tricks.

Published on November 18th, 2024